It's worth knowing that NetApp multipathing permits only two paths per LUN, on an "active/passive" basis. The system automatically spreads the primary paths of its LUNs across different HBAs. As a fan of math and symmetry, I found that this two-path limitation challenged the feng shui of my SAN zoning model, since each T800 has four nodes. I was able to satisfy my OCD by zoning each NetApp to both T800s with two paths to each frame: four paths per filer, eight in total system-wide, which works out to exactly one path to each 3PAR node.
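To double-check the path arithmetic, here is a small sketch of the zoning model. The filer and frame names, the node numbering, and the idea that Filer A lands on nodes 0-1 while Filer B lands on nodes 2-3 are all hypothetical; the `assign_primaries` helper only illustrates what "alternating the active path so primaries spread across HBAs" means and is not NetApp's actual balancing algorithm.

```python
from collections import Counter

# Hypothetical names; topology follows the post: two filers, two T800 frames
# with four controller nodes each, and two paths from each filer to each frame.
FILERS = ["filer-a", "filer-b"]
FRAMES = ["t800-1", "t800-2"]
NODES_PER_FRAME = 4
PATHS_PER_FRAME = 2


def build_zoning():
    """Return one (filer, frame, node) tuple per zoned path."""
    zones = []
    for f_idx, filer in enumerate(FILERS):
        for frame in FRAMES:
            for p in range(PATHS_PER_FRAME):
                # Filer A lands on nodes 0-1 of each frame, Filer B on nodes 2-3,
                # so no 3PAR node carries more than one filer path.
                zones.append((filer, frame, f_idx * PATHS_PER_FRAME + p))
    return zones


def assign_primaries(luns, filer, frame, zones):
    """Illustrative only: give each LUN its two paths as (active, passive),
    alternating which path is active so primaries spread across both HBAs."""
    pair = [z for z in zones if z[0] == filer and z[1] == frame]
    return {lun: (pair[i % 2], pair[(i + 1) % 2]) for i, lun in enumerate(luns)}


zones = build_zoning()
# Count paths per filer and per 3PAR node to confirm the symmetry.
paths_per_filer = Counter(filer for filer, _, _ in zones)
paths_per_node = Counter((frame, node) for _, frame, node in zones)
print(len(zones), dict(paths_per_filer))
print(all(count == 1 for count in paths_per_node.values()),
      len(paths_per_node) == len(FRAMES) * NODES_PER_FRAME)
```

Two filers times two frames times two paths gives eight zones, four per filer, and since there are eight 3PAR nodes in total, each node ends up carrying exactly one path.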

My storage plan is that Filer A will build all of its aggregates from LUNs on T800-1, while Filer B does the same with T800-2. Since the Filers build RAID arrays from the LUNs assigned to them, we opted for RAID-0, because the 3PAR is already handling data protection (RAID 5+1 with disk shelf survivability). A RAID-0 array is lost if any one of its member LUNs is lost, so I prefer that no RAID-0 array spans both storage frames; keeping each array on a single frame minimizes its points of failure.
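The placement rule can be expressed as a simple check. The aggregate and LUN names below are hypothetical, and `aggr_bad` is a deliberately wrong example included to show what the check catches; this is a sketch of the reasoning, not a NetApp or 3PAR tool.

```python
# Hypothetical inventory: aggregate name -> list of (lun_id, frame) pairs.
AGGREGATES = {
    "aggr1_a": [("lun01", "t800-1"), ("lun02", "t800-1")],
    "aggr1_b": [("lun11", "t800-2"), ("lun12", "t800-2")],
    "aggr_bad": [("lun03", "t800-1"), ("lun13", "t800-2")],  # spans both frames
}


def frames_for(aggr_luns):
    """Set of storage frames an aggregate's LUNs live on."""
    return {frame for _, frame in aggr_luns}


def spanning_aggregates(aggregates):
    """Aggregates whose RAID-0 stripe depends on more than one frame."""
    return sorted(name for name, luns in aggregates.items()
                  if len(frames_for(luns)) > 1)


def lost_if_frame_fails(aggregates, failed_frame):
    """RAID-0 has no redundancy: losing any member LUN loses the aggregate."""
    return sorted(name for name, luns in aggregates.items()
                  if failed_frame in frames_for(luns))


print(spanning_aggregates(AGGREGATES))
print(lost_if_frame_fails(AGGREGATES, "t800-1"))
print(lost_if_frame_fails(AGGREGATES, "t800-2"))
```

The spanning aggregate shows up in the failure list for either frame, which is exactly the extra exposure the single-frame rule avoids.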

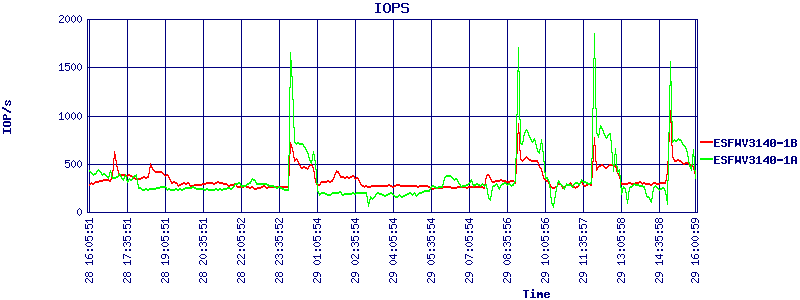

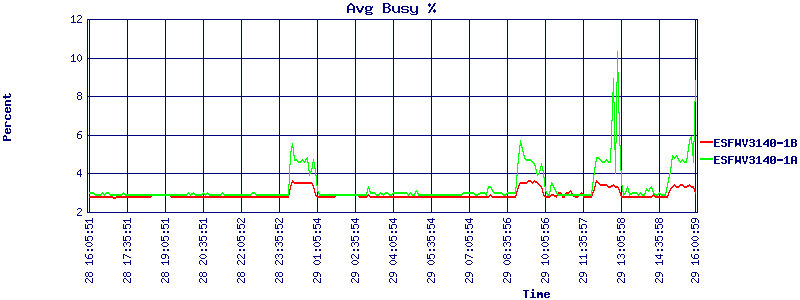

Performance-wise, the NetApps appear to be very chatty on the fibre, constantly streaming 300 to 400 IOPS of 8k blocks all day, every day. This is odd, because I know the data that currently resides on the new hardware is typically idle and accessed by only a few IT users (we've halted all migrations until we can patch the NetApps). This makes me suspect a background disk scrub routine or some other type of maintenance enabled on the NetApp that I need to discover and review. The charts below show traffic as monitored by 3PAR System Reporter at the "High-Res" collection interval for the past 24 hours.
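To put the background chatter in perspective, a quick back-of-the-envelope calculation helps. It assumes "8k" means 8 KiB per I/O and that the rate holds steady for a full 24 hours; it says nothing about whether the traffic is reads or writes.

```python
BLOCK_KIB = 8                      # assumption: "8k" blocks = 8 KiB per I/O
SECONDS_PER_DAY = 24 * 60 * 60


def throughput_mib_s(iops, block_kib=BLOCK_KIB):
    """Sustained throughput in MiB/s for a given I/O rate."""
    return iops * block_kib / 1024


def daily_volume_gib(iops, block_kib=BLOCK_KIB):
    """Data moved per day in GiB if the rate never lets up."""
    return iops * block_kib * SECONDS_PER_DAY / (1024 ** 2)


for iops in (300, 400):
    print(f"{iops} IOPS: {throughput_mib_s(iops):.2f} MiB/s, "
          f"{daily_volume_gib(iops):.1f} GiB/day, "
          f"{iops * SECONDS_PER_DAY:,} I/Os/day")
```

At 300 to 400 IOPS that is only about 2.3 to 3.1 MiB/s, but it adds up to roughly 200 to 260 GiB and 26 to 35 million I/Os per day against data that should be sitting idle.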

The LUNs assigned to the NetApps show a constant 3% utilization: a steady, never-ending stream of IOPS. The spikes represent the snapshot cycles on my Filers; as each new snapshot is created, the oldest (30-day-old) one is deleted and purged. Go WAFL? Hopefully I can locate and disable the cause of this. If not, I may move the NetApps to dedicated ports on the 3PAR hardware; currently I have them zoned to ports shared by Windows servers.
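The rotation behind those spikes can be sketched as a fixed-size retention window: once the window is full, every new snapshot pushes the oldest one out, so each cycle does a create plus a delete. This assumes one snapshot per day with a 30-snapshot window and uses hypothetical `nightly.N` names; it is not the Data ONTAP snapshot scheduler, and it does not model the WAFL block reclamation that a delete triggers.

```python
from collections import deque

RETENTION = 30  # assumption: one snapshot per day, kept for 30 days


def rotate(snapshots, new_snapshot, retention=RETENTION):
    """Add a snapshot and return the ones that fall out of retention."""
    snapshots.append(new_snapshot)
    expired = []
    while len(snapshots) > retention:
        # Oldest snapshot is at the left end of the deque.
        expired.append(snapshots.popleft())
    return expired


snaps = deque()
deleted_per_day = []
for day in range(40):
    deleted_per_day.append(rotate(snaps, f"nightly.{day}"))  # hypothetical names

for day in (29, 30, 39):
    print(day, deleted_per_day[day])
```

For the first 30 cycles nothing is deleted; from then on every cycle deletes exactly one old snapshot, which matches the create-plus-purge pattern described above.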